v0.5.12 released, and lots of documentation fixes

Today I got a fire lit under me and decided to do a bunch of bug fixing and general performance improvements.

Release notes

Today I got a fire lit under me and decided to do a bunch of bug fixing and general performance improvements.

Changes since v0.5.11:

- Fixed a micro-optimization which was causing some pretty bad cache problems (I really should write a blog entry about this but tl;dr micro-optimizations are usually bugs in disguise)

- Fixed an issue which was causing the page render cache to not actually activate most of the time (you know there’s going to be a ramble about this below…)

- Fixed a bunch of spurious log meessages about nested transactions

- Refactored the way that

markup=Falseworks, making it available from all Markdown/HTML contexts - Changed

no_smartquotes=Truetosmartquotes=False(no_smartquotesis retained for template compatibility) (although I missed this onentry.title; I’ve already committed a fix for the next version) - Improve the way that the page render cache interacts with templates

- Fixed an issue where changing a template might cause issues to occur until the cache expires

Documentation improvements

- The Apache/nginx deployment guide is vastly improved:

- Now it uses UNIX domain sockets instead of localhost ports, making service provisioning a bit easier

- The systemd unit is now a user unit instead of a system unit, which improves security and also allows for gentler service restarts

- The git deployment guide has been updated per the above, and also some of the code snippets are cleaned up

- The information about HTML processing and image renditions has been consolidated and cleaned up

- Information about private posts and user configuration has also been cleaned up somewhat

- Also lots of updates to the beesbuzz.biz Publ templates

The caching stuff

So, once upon a time, the page render cache was caching at the response level, rather than the render level, which seemed like a good idea at the time. But then I realized this was bad, and made it so that if the request was coming from a browser that could potentially return a not modified response, this would break things badly. So, in that situation it just turned the render cache off.

This of course had the silly side effect of making the rendition cache not active in precisely the situation when it should most be active!

Later I had refactored the rendition cache to cache at the render level, with the request routing and response (which are cheap) always evaluated and only the page render itself would be cached. But I forgot to remove the check above.

So, all this time, the caching system was only being used for caching… stuff that didn’t really benefit from being cached. Like low-level file lookups, which aren’t exactly a performance hog (and could lead to rather unfortunate issues with template locations being out-of-date until cache expiry took place).

Anyway, after getting the cache to actually work, I also realized there were a few things I could do to make stale cached renditions no longer linger. Previously, the cache key that’s generated for a rendition just involved (essentially) the file paths of the relevant items in the URL; category templates would know about the template’s file path and the category path, and entry templates would additionally know about the entry ID, and then at a global level it would also know about the request’s base URL (so it would cache different hostnames and schemes differently, which also had the nice side-effect of eliminating key conflicts if two sites were configured with the same memcached key prefix but I digress).

Well, first I realized it was pretty trivial to have entries and templates express their file fingerprint as part of their cache key, so changes to templates and entries would cause immediate cache misses – meaning instant updates on the next page load. But this would only apply to content updates on entry pages, not on category pages.

So I started to go down a rabbit hole where updates to entries would also update the cache key for the category itself, which caused indexing to take a lot more time and also required storing metadata about all categories (and not just ones with configuration metadata) in the database, and this had a few other annoying side-effects (meaning bugs) that had to be ironed out. And it still wouldn’t help to update category pages which change due to an update to an entry in a different category.

Then I realized that the easiest thing to do would be to have the latest file modification be part of the cache key; any content file update would then basically invalidate the entire page render cache. Given that most sites only update very infrequently this seemed like a nice tradeoff. So I started implementing that…

…and then realized that in the early days of me adding caching to Publ, I had already implemented that since I thought it would be useful, and it was just not being used at all! (And I had even touched this code when I was adding mypy annotations to everything, but didn’t even think about it…)

So, now a bit of functionality that’s been there has theoretically made the rendition cache a lot faster, even around site resets. Neat.

In any case, after all this work I decided to do some benchmarking. I used bench to time rendering the Publ tests index page, and the results were interesting:

No cache

time 90.92 ms (85.06 ms .. 97.29 ms) 0.993 R² (0.985 R² .. 1.000 R²) mean 87.33 ms (86.27 ms .. 90.70 ms) std dev 2.868 ms (968.0 μs .. 4.970 ms)

SimpleCache (in-process object store)

time 37.22 ms (36.19 ms .. 38.11 ms) 0.999 R² (0.998 R² .. 1.000 R²) mean 38.10 ms (37.39 ms .. 40.58 ms) std dev 2.433 ms (469.3 μs .. 4.620 ms) variance introduced by outliers: 19% (moderately inflated)

MemcacheD

time 38.38 ms (37.95 ms .. 39.06 ms) 0.999 R² (0.999 R² .. 1.000 R²) mean 38.21 ms (37.92 ms .. 38.51 ms) std dev 570.3 μs (428.0 μs .. 762.1 μs)

So, at least on that fairly simple test, the tests index page runs about 2x faster with a cache present than without. (MemcacheD is a little slower than SimpleCache, but that’s to be expected, as it has to serialize/deserialize objects over the network. Frankly I’m surprised it’s only that small of a difference!)

Then I decided to benchmark the main page of my personal website, which is rather more complicated. Running locally I got these results:

No cache

time 280.0 ms (274.8 ms .. 284.9 ms) 1.000 R² (0.999 R² .. 1.000 R²) mean 278.0 ms (277.1 ms .. 279.7 ms) std dev 1.548 ms (749.5 μs .. 2.023 ms) variance introduced by outliers: 16% (moderately inflated)

SimpleCache

time 19.32 ms (19.19 ms .. 19.42 ms) 1.000 R² (1.000 R² .. 1.000 R²) mean 19.28 ms (19.21 ms .. 19.38 ms) std dev 201.5 μs (138.9 μs .. 289.7 μs)

MemcacheD

time 20.85 ms (20.62 ms .. 21.13 ms) 0.999 R² (0.998 R² .. 1.000 R²) mean 20.57 ms (20.44 ms .. 20.74 ms) std dev 341.0 μs (254.2 μs .. 511.7 μs)

So, yeah, 14x faster… And my site feels way more responsive now, too, at least when Pushl isn’t thrashing the cache.

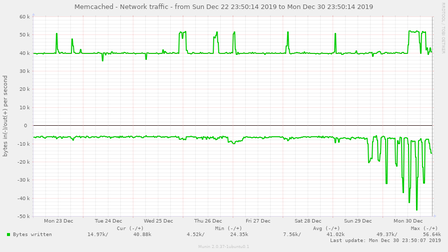

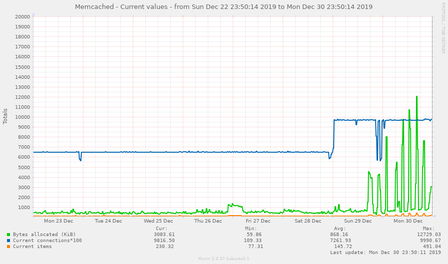

Time will tell just how much of a difference this makes in practical terms; I’ve had munin monitoring my MemcacheD for a while and the graphs made it look like it was pretty effective but it was of course not actually monitoring anything useful. But here’s some graphs of the last week:

In a week or so I’ll see what they’re like and if there’s any difference. I’m also just realizing that my “HTTP load time” graph isn’t actually very useful so I need to configure Munin more appropriately.

I’m also not entirely sure what those semi-regular spikes in MemcacheD traffic have been; it’s unfortunately not easy to tell what individual things are using MemcacheD since it’s just a big ol' global key-value store, more or less.